PCIe 4.0 and beyond

As the most popular consumer SSD form factor, M.2, is limited to four PCIe lanes, the only way to increase speeds while retaining compatibility with existing systems is to increase the per-lane bandwidth.
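To put per-lane bandwidth in numbers, here is a small sketch that computes the raw one-direction bandwidth of an x4 link for each PCIe generation. It assumes only the 128b/130b line encoding used from PCIe 3.0 onwards and ignores packet and protocol overhead, so real drives top out somewhat lower (around 3.5GB/s on PCIe 3.0 x4).

```python
# Raw one-direction PCIe bandwidth per generation (sketch).
# Assumptions: 128b/130b encoding (PCIe 3.0 and later); TLP headers,
# flow control and other protocol overhead are ignored.

TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0, 5: 32.0}  # giga-transfers/s per lane


def lane_bandwidth_gb_s(gen: int) -> float:
    """Usable bits per second after encoding, converted to GB/s."""
    return TRANSFER_RATE_GT_S[gen] * (128 / 130) / 8


def link_bandwidth_gb_s(gen: int, lanes: int = 4) -> float:
    """Bandwidth of a link with the given lane count (default: M.2's x4)."""
    return lane_bandwidth_gb_s(gen) * lanes


if __name__ == "__main__":
    for gen in sorted(TRANSFER_RATE_GT_S):
        print(f"PCIe {gen}.0 x4: {link_bandwidth_gb_s(gen):.2f} GB/s")
```

Each generation doubles the transfer rate, so the same x4 M.2 slot goes from roughly 3.9GB/s (PCIe 3.0) to 7.9GB/s (PCIe 4.0) and 15.8GB/s (PCIe 5.0) in raw terms.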

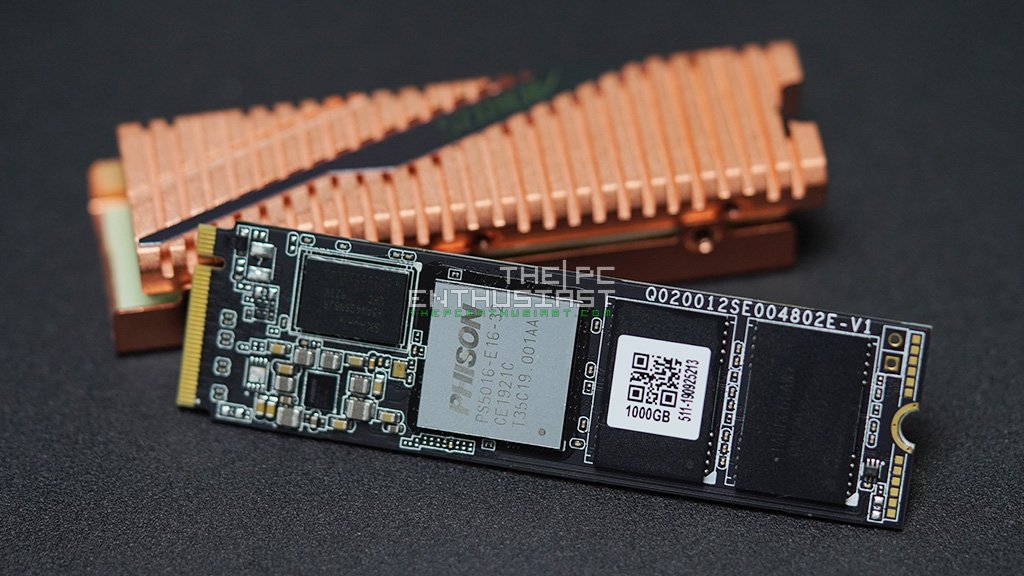

This time around, breaking with tradition, Samsung wasn’t the first to announce a PCIe 4.0 drive. The first PCIe 4.0 controller arrived in Q1 2019: the Phison E16[51]. It didn’t quite use all of the extra bandwidth, but it clearly went well beyond what a PCIe 3.0 x4 link can carry. It used two ARM cores, along with two proprietary CO-X processor cores, to handle its 8 NAND channels.

The first manufacturer to release an actual drive was Gigabyte, using the aforementioned Phison E16 controller, only half a year later in Q3 2019[52]. It was rated at 5.0/4.4GB/s sequential read/write and 750k/700k random read/write IOPS[53]. The controller was paired with the usual 1GB of DDR4 RAM per TB of flash, and the flash itself was 96-layer Toshiba BiCS 3D TLC NAND.
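The 1GB-per-TB ratio is commonly explained by the flash translation layer’s logical-to-physical (L2P) mapping table, which the controller keeps in DRAM. The sketch below assumes a flat table with one 4-byte entry per 4 KiB page; that is a common design, but the source does not confirm it for the E16 specifically.

```python
# Size of a flat logical-to-physical (L2P) mapping table (sketch).
# Assumptions: one entry per 4 KiB logical page, 4 bytes per entry.

PAGE_SIZE_BYTES = 4 * 1024
ENTRY_SIZE_BYTES = 4


def l2p_table_bytes(capacity_bytes: int) -> int:
    """DRAM needed to hold the whole mapping table for a drive."""
    entries = capacity_bytes // PAGE_SIZE_BYTES
    return entries * ENTRY_SIZE_BYTES


if __name__ == "__main__":
    for capacity_tb in (1, 2):
        table = l2p_table_bytes(capacity_tb * 10**12)
        print(f"{capacity_tb} TB of flash -> {table / 10**9:.2f} GB of L2P table")
```

Four bytes of table per 4 KiB of flash is a 1:1024 ratio, which is where the "1GB per TB" rule of thumb comes from.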

While some motherboards had integrated M.2 heatsinks, they weren’t a must-have in the PCIe 3.0 era. With PCIe 4.0, however, the higher speeds brought extra heat as a byproduct, and heatsinks became necessary to sustain peak performance for more than a couple of seconds. Bundled heatsinks became very common, with some drives sold in two variants, with and without one.

New form factors for the enterprise

This isn’t strictly tied to PCIe 4.0, but it is somewhat of a byproduct of the higher speeds and thus higher power consumption of the newer drives.

Traditionally, servers used internal M.2 drives: the 22110 length had some datacenter-focused models with power-loss protection (PLP) and the usual enterprise-grade feature set. For high-performance needs, there were HHHL add-in cards such as the Samsung PM1725 and the Intel P4618. There were others, but these two used eight PCIe lanes, which made them faster than most M.2 drives. For hot-swappable storage, there was U.2, which was compatible with backplanes and could accommodate drives from 7 to 15mm thick, covering low-power capacity-optimized drives as well as high-performance ones that drew as much as 25W.

This was all pretty good for the time, but then AMD’s EPYC CPUs were released. These CPUs provided 128 PCIe lanes, which meant there were suddenly far more lanes to wire devices to. A single CPU could drive more four-lane PCIe SSDs than manufacturers could fit into the 2.5″ drive bays of a 2U server, let alone a 1U one. So they needed something better. In Q3 2019, the second-generation AMD EPYC lineup was released with support for PCIe 4.0, meaning even faster drives and even more heat. As of 2023, we have CPUs with 128 PCIe 5.0 lanes per socket, providing unprecedented I/O bandwidth for SSDs and other devices.
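A quick back-of-the-envelope calculation shows why the lane count mattered. This sketch assumes every one of a socket’s 128 lanes could be dedicated to x4 SSDs, which real platforms never do (NICs and other devices need lanes too), and uses raw 128b/130b per-lane figures with protocol overhead ignored.

```python
# Lane budget of a 128-lane socket spent entirely on x4 SSDs (sketch).
# Assumption: no lanes reserved for NICs, GPUs or other devices;
# bandwidth is raw one-direction, 128b/130b encoding, no protocol overhead.

LANES_PER_SOCKET = 128
LANES_PER_SSD = 4
TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0, 5: 32.0}


def ssds_per_socket(lanes: int = LANES_PER_SOCKET, per_ssd: int = LANES_PER_SSD) -> int:
    """How many x4 drives the lane budget could feed."""
    return lanes // per_ssd


def aggregate_gb_s(gen: int, lanes: int = LANES_PER_SOCKET) -> float:
    """Raw one-direction bandwidth summed across all lanes."""
    return TRANSFER_RATE_GT_S[gen] * (128 / 130) / 8 * lanes


if __name__ == "__main__":
    print(f"x4 SSDs per socket: {ssds_per_socket()}")
    for gen in (3, 4, 5):
        print(f"PCIe {gen}.0, 128 lanes: {aggregate_gb_s(gen):.0f} GB/s")
```

Thirty-two x4 drives is more than a typical 24-bay 2.5″ 2U front panel holds, and at PCIe 5.0 rates the lanes add up to roughly 500GB/s in each direction.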

The 2.5″ form factor, used by both U.2 and U.3 drives of varying thicknesses, was not designed with SSDs in mind. It was good enough for SATA drives, but it has reached its limits, and the only reason to use it now is backwards compatibility.

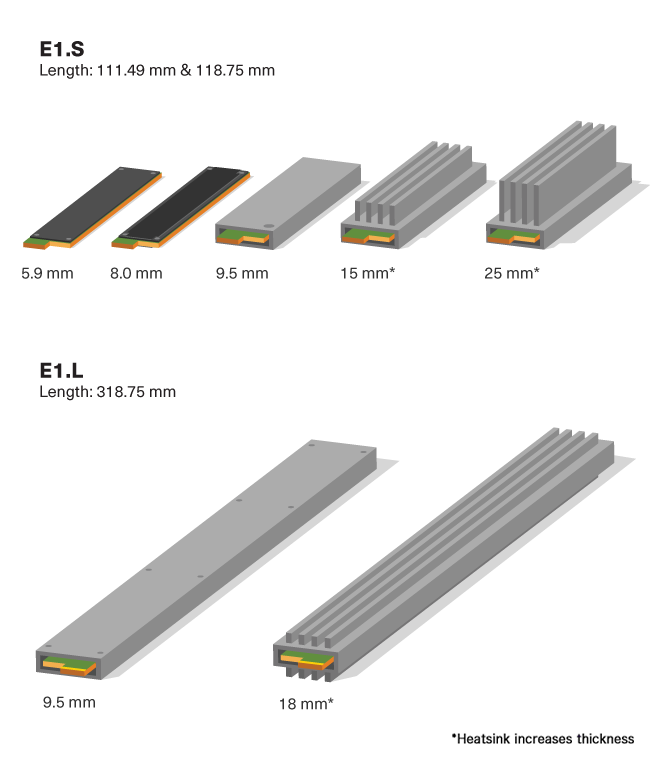

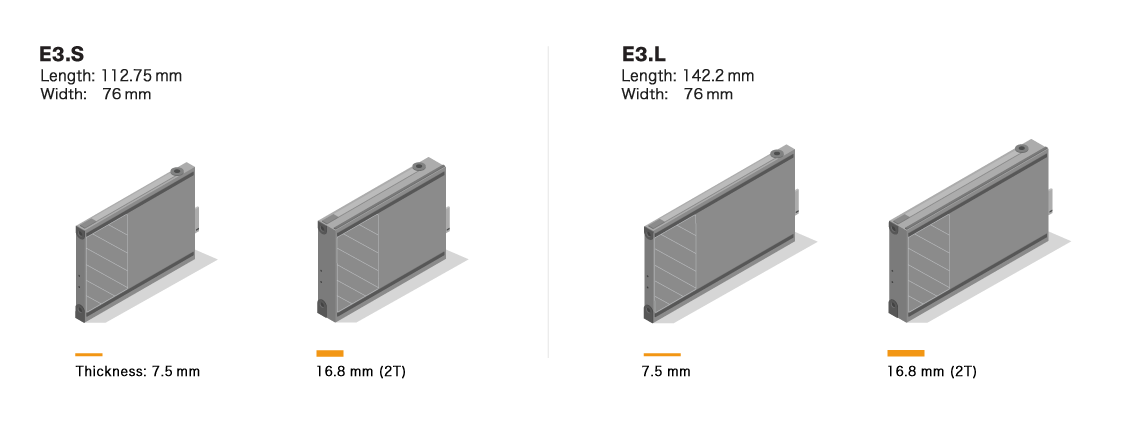

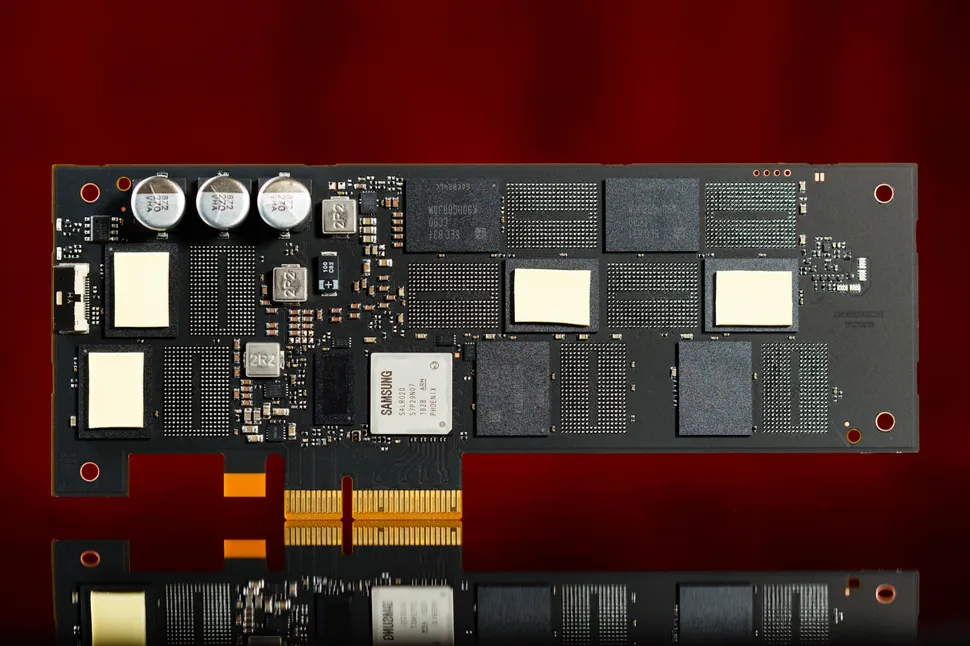

Newer drives need more power to deliver higher speeds, access to more PCIe lanes, and a shape that uses 1U and 2U server chassis more efficiently. These were the requirements for the new form factors, E1.S, E1.L, E3.S and E3.L, collectively known as EDSFF.

The E1 series is somewhat similar to M.2, but with higher power limits and some much longer options that can house more NAND packages. The longer variants are commonly referred to as rulers, for good reason. As seen below, there are options of varying thickness that can dissipate anywhere from 12 to 40W. These drives are designed to fit in a 1U chassis.

E3 drives also come in two lengths. They closely resemble the traditional 2.5″ form factor, but are easy to tell apart by their protruding edge connector. They can handle 25 to 70W, and their other trick is support for multiple edge connectors, which can theoretically provide more than 16 lanes of PCIe connectivity to the host CPU, either for added speed or for redundancy in dual-port mode (such as 4 lanes each to two controller nodes). These drives fit a 2U chassis, although they can also be installed horizontally, stacked, in a 1U unit.

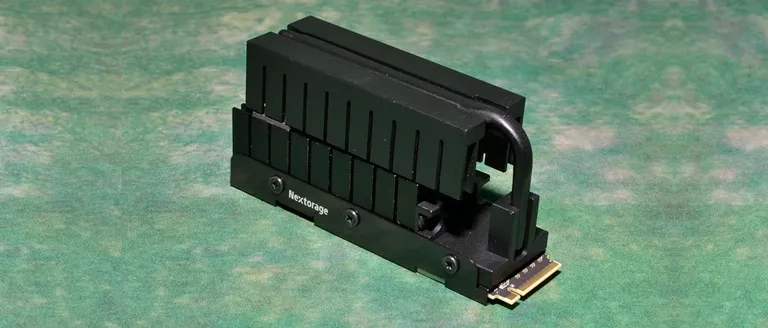

The bleeding edge of today

Today we have PCIe 5.0 connectivity on client systems, which generally allows them to provide one four-lane M.2 slot for a PCIe 5.0 SSD. The drives are available for purchase, although they are still first-generation PCIe 5.0 designs, mostly based on Phison’s PS5026-E26 controller[54]. The fastest of these could theoretically hit 14GB/s reads[55], but the bottleneck is usually NAND speed: 2400MT/s NAND is still difficult to obtain, since only Micron, SK Hynix and YMTC can currently manufacture it at scale. These drives also run very hot, and most are supplied with very serious-looking heatsinks[56], which are not just recommended but required to run the drives for any length of time.
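The NAND bottleneck can be sketched the same way: a controller can never move more data than its flash channels carry. The channel count (8) and the one-byte-per-transfer channel width are assumptions about a typical high-end client controller, not figures from the source, and ECC and command overhead are ignored, so real drives land below these ceilings.

```python
# Upper bound on sequential throughput: min(NAND bus, host link) (sketch).
# Assumptions: 8 NAND channels, 1 byte per transfer per channel,
# no ECC/command overhead, PCIe 5.0 x4 host link with 128b/130b encoding.

CHANNELS = 8
PCIE5_X4_GB_S = 32.0 * (128 / 130) / 8 * 4  # ~15.75 GB/s raw


def nand_bus_gb_s(mt_s: int, channels: int = CHANNELS, bytes_per_transfer: int = 1) -> float:
    """Aggregate NAND interface bandwidth: MT/s * bytes = MB/s, / 1000 = GB/s."""
    return channels * mt_s * bytes_per_transfer / 1000


def drive_ceiling_gb_s(mt_s: int) -> float:
    """Whichever is slower, the flash interface or the host link, sets the limit."""
    return min(nand_bus_gb_s(mt_s), PCIE5_X4_GB_S)


if __name__ == "__main__":
    for speed in (1600, 2400):
        print(f"{speed} MT/s NAND: bus {nand_bus_gb_s(speed):.1f} GB/s, "
              f"drive ceiling {drive_ceiling_gb_s(speed):.2f} GB/s")
```

Under these assumptions, 1600MT/s flash caps the drive at 12.8GB/s, below the 14GB/s mark, while 2400MT/s lifts the flash interface to 19.2GB/s and leaves the PCIe 5.0 x4 link as the limit.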

New controllers with lower power consumption have already been announced[57], but it takes time to incorporate them into products and bring them to market. And until the availability of the required NAND flash improves, few drives will be able to hit the 14GB/s mark, regardless of the controller they use.

As a novelty, China-based Maxio has announced controllers[58] capable of delivering up to 14.8GB/s, and China-based Yingren is working on a RISC-V-based controller[59] to achieve similar speeds.

Optane

The article wouldn’t be complete without Optane, Intel’s brand for 3D XPoint memory, an alternative to NAND flash developed by Intel and Micron and acclaimed for its consistently low latency and high IOPS performance. It was available in three main flavours for three very different market segments. Its main differentiators were incredibly high endurance and consistently low access times. Unlike NAND, which uses charges trapped in floating gates, XPoint takes advantage of the phase-change properties of a special alloy, Ge2Sb2Te5, which, when heated to different temperatures, changes its physical structure and with it its electrical properties, namely resistance. That resistance is how data is stored in these cells[60].

Caching Drive

The Optane M10 was a low-performance 16GB or 32GB M.2 cache drive with a PCIe 3.0 x2 electrical interface, meant to be used as a caching drive alongside slower traditional drives on compatible systems. These were rated at 1350/290MB/s and used only two of the four PCIe lanes an M.2 slot offers; their main advantage was 2-3x lower latency compared to mainstream SSDs of the time (2017). A similar solution, the Optane H10[61], paired the caching drive with some QLC NAND flash on a single M.2 device and handled caching using Intel’s Rapid Storage Technology software. It was available in 16/256GB and 32/512GB capacities, where the first number is the size of the Optane cache and the second is the size of the QLC NAND. It was later upgraded, and the H20 series was released with capacities of up to 32GB/1TB.

High-performance SSD

Of course, Intel also made traditional NVMe drives with Optane memory, in both HHHL and 2.5″ U.2 form factors, using a four-lane PCIe 3.0 bus. This means they can’t compete with modern drives in sequential speeds, simply because the interface limits them to roughly 3.5GB/s, but sequential speed is not the main value of Optane. Below is an Intel Optane SSD 905P[62], the consumer counterpart of Intel’s datacenter Optane SSDs, in its 960GB capacity, released in Q2 2018. This particular drive is rated at 17.52PBW and contains a total of 1,120GB of XPoint memory.
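Endurance ratings are easier to compare when converted to drive writes per day (DWPD). The sketch below assumes a five-year rating period, which is an assumption rather than something stated for the 905P here, and also derives how much XPoint is held back as spare area from the 1,120GB raw and 960GB usable figures.

```python
# Convert a PBW endurance rating to DWPD and compute spare area (sketch).
# Assumptions: five-year rating period of 365-day years, decimal units.


def dwpd_from_pbw(pbw: float, capacity_gb: float, years: int = 5) -> float:
    """Full-capacity writes per day that add up to the PBW rating."""
    total_gb_written = pbw * 1_000_000  # 1 PB = 1,000,000 GB
    return total_gb_written / capacity_gb / (years * 365)


def spare_fraction(raw_gb: float, usable_gb: float) -> float:
    """Extra media relative to the user-visible capacity."""
    return (raw_gb - usable_gb) / usable_gb


if __name__ == "__main__":
    print(f"905P 960GB: {dwpd_from_pbw(17.52, 960):.1f} DWPD")
    print(f"spare area: {spare_fraction(1120, 960):.1%}")
```

Under the five-year assumption, 17.52PBW on 960GB works out to exactly 10 DWPD, and the extra 160GB of XPoint is about one sixth of the usable capacity.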

Interestingly enough, the PCIe-based add-in cards did not appear to use a separate DRAM cache, and the controller was, as expected, an in-house Intel chip. According to Intel, the drive is capable of 2.6/2.2GB/s read/write speeds and 200k/280k IOPS. However, the most significant gain over NAND flash-based SSDs is in latency: at low queue depths, the 905P shines and is several times faster than other drives[63].
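Why latency dominates at low queue depths follows from Little’s law: the I/O rate a drive sustains equals the number of requests in flight divided by the time each one takes. The latencies below are hypothetical round numbers chosen for illustration, not measurements of the 905P or of any NAND drive, and the `max_iops` cap is a simplified stand-in for a drive’s rated limit.

```python
# Little's law applied to storage: IOPS = queue depth / latency (sketch).
# Latency values are hypothetical; real drives also saturate at some
# maximum IOPS, modelled here by the optional max_iops cap.

from typing import Optional


def iops(queue_depth: int, latency_us: float, max_iops: Optional[float] = None) -> float:
    """Requests completed per second with queue_depth requests in flight."""
    rate = queue_depth * 1_000_000 / latency_us
    return rate if max_iops is None else min(rate, max_iops)


if __name__ == "__main__":
    HYPOTHETICAL_LATENCY_US = {"low-latency media": 10.0, "NAND flash": 80.0}
    for name, latency in HYPOTHETICAL_LATENCY_US.items():
        print(f"{name}: QD1 = {iops(1, latency):,.0f} IOPS")
```

At queue depth 1, an 8x latency gap turns directly into an 8x IOPS gap; at high queue depths both kinds of drive run into their IOPS limits and the difference shrinks.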

The DCPMM

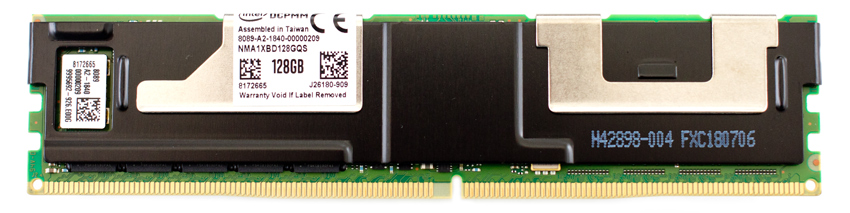

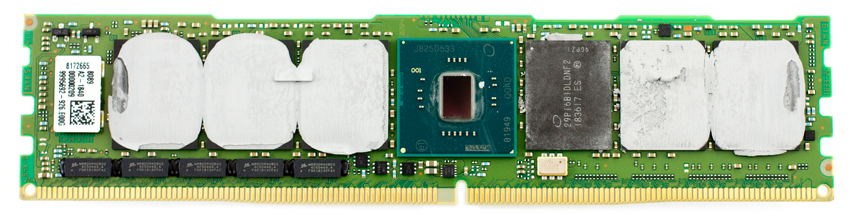

The DCPMM (Data Center Persistent Memory Module) was arguably the most interesting concept. It took a DDR4 memory stick and populated it with XPoint memory chips and a custom controller that used the DDR4 memory interface to talk to the host CPU. This decreased latency even further, as data didn’t need to go through the PCIe controller and the storage driver stack. These modules were compatible with some of Intel’s LGA3647 Xeon Scalable processors and could either be addressed as additional RAM or set up as non-volatile block storage. They look just like a DDR4 DIMM with a heatsink on it and were available in capacities of 128, 256 and 512GB.

Rated at 6.8/1.85GB/s for the 128GB module, 6.8/2.3GB/s for the 256GB variant and 5.3/1.89GB/s for the 512GB variant[64], they are much faster than the PCIe variants. Endurance is rated at 292/363/300PBW respectively. They draw 15-18W, which is quite a lot for a DIMM, especially a DDR4 one.

The second- and third-generation modules improved on these numbers, reaching as high as 10.55/3.25GB/s for the third-generation 256GB variant, which used a DDR5 interface. These came very late and were discontinued shortly after being announced, so I don’t think many systems were sold with them.

Samsung 983 ZET

Of course, Samsung didn’t want to miss the party, so it announced its own contender in the race to build a low-latency drive: the Samsung 983 ZET. No, it doesn’t use any shiny new technology like XPoint; instead, Samsung went back to its roots and used 48-layer V-NAND flash in SLC mode. This gives much faster read/write speeds per NAND package, plus the added endurance of SLC.

The 983 ZET was officially rated at 8.5 DWPD and 10 DWPD[65] over five years for its 480GB and 960GB capacities, which converts to 7.446PBW and 17.52PBW. Compared with the P4800X’s 20.5PBW and 41PBW for its 375GB and 750GB capacities, it is still not quite there, but it’s probably enough for a caching drive, even in a server.
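The DWPD-to-PBW conversion above is simple arithmetic: rated daily full-drive writes, times capacity, times the number of days in the rating period. The sketch assumes five 365-day years and decimal units; the P4800X entries assume 30 DWPD on its 375GB and 750GB models, which is what its 20.5PBW and 41PBW figures correspond to.

```python
# Convert a DWPD endurance rating to petabytes written (sketch).
# Assumptions: five-year period of 365-day years, 1 PB = 1,000,000 GB,
# and 30 DWPD for the P4800X models listed.


def pbw_from_dwpd(dwpd: float, capacity_gb: float, years: int = 5) -> float:
    """Total petabytes written if the drive is filled dwpd times a day."""
    return dwpd * capacity_gb * 365 * years / 1_000_000


DRIVES = [
    ("983 ZET 480GB", 8.5, 480),
    ("983 ZET 960GB", 10.0, 960),
    ("P4800X 375GB", 30.0, 375),
    ("P4800X 750GB", 30.0, 750),
]

if __name__ == "__main__":
    for name, dwpd, capacity in DRIVES:
        print(f"{name}: {pbw_from_dwpd(dwpd, capacity):.2f} PBW")
```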

The image above shows the 960GB drive. While the unpopulated NAND package footprints suggest the PCB was designed to accommodate more flash for higher capacities, the 983 ZET topped out at 960GB. My guess is that Samsung reused the same PCB for the SZ985[66], which scaled up to 3.2TB. These used more over-provisioning and were rated at 30 DWPD, so it is likely that the 960GB 983 ZET could be reconfigured as an 800GB SZ985. The controller seems rather small, and on the 983 ZET it was paired with a single 1.5GB package of DDR4 RAM for caching.

It was rated at 3.4/3.0GB/s sequential read/write and 750k/60k IOPS, which is very respectable. Its latency was much better than that of ordinary SSDs, and in sequential reads and writes it was actually faster than the Optane SSD 905P, although it couldn’t dethrone it in random read/write performance.
