PCI-Express

I would like to explain some of the terminology before I get to the examples of PCIe drives, as there are a number of things which aren’t immediately clear when we talk about PCIe SSDs.

First, NVMe is a protocol, not an interface. Early consumer PCIe SSDs used the AHCI protocol, which allowed motherboards without NVMe support to boot from them. They carried their own PCIe AHCI “driver”, which the BIOS/UEFI loaded as it would for any other PCIe device and which presented the drive as a bootable device. NVMe drives do not have this and need support from the BIOS in order to boot. They still work perfectly fine as data drives without BIOS support, in virtually any PCIe slot. The enterprise-focused solutions usually required specific drivers to work, and they may or may not be bootable.
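The protocol a PCIe storage device speaks is visible in its PCI class code, which is how firmware and operating systems pick a driver for it. The sketch below classifies a few class codes; the sample values are hypothetical, written in the form Linux exposes them in `/sys/bus/pci/devices/<address>/class`, and the function name is made up for illustration.

```python
# PCI class codes (base class, subclass, programming interface) for the
# two storage protocols discussed above.
AHCI_CLASS = 0x010601  # mass storage / SATA / AHCI
NVME_CLASS = 0x010802  # mass storage / non-volatile memory / NVMe


def storage_protocol(class_code: int) -> str:
    """Return the host protocol implied by a 24-bit PCI class code."""
    if class_code == AHCI_CLASS:
        return "AHCI"
    if class_code == NVME_CLASS:
        return "NVMe"
    if (class_code >> 16) == 0x01:
        # Base class 0x01 is mass storage, e.g. a SAS or RAID controller.
        return "other mass storage"
    return "not a storage device"


# Hypothetical sample values, not read from a real system.
for raw in ("0x010601", "0x010802", "0x010700", "0x020000"):
    print(raw, "->", storage_protocol(int(raw, 16)))
```

A drive that reports the AHCI class code can be handled by the same generic AHCI driver as a SATA controller, which is why those early drives worked on boards that knew nothing about NVMe.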

Second, there were drives, so-called “flash accelerators”, like the Oracle F80, which were essentially four SATA SSDs with a SATA controller on a PCIe card. While this is technically a PCIe device, the flash controller itself did not have native PCIe support. The path of the data was a bit like this:

CPU -> PCIe -> PCIe-to-SATA controller -> SATA-based SSD controllers

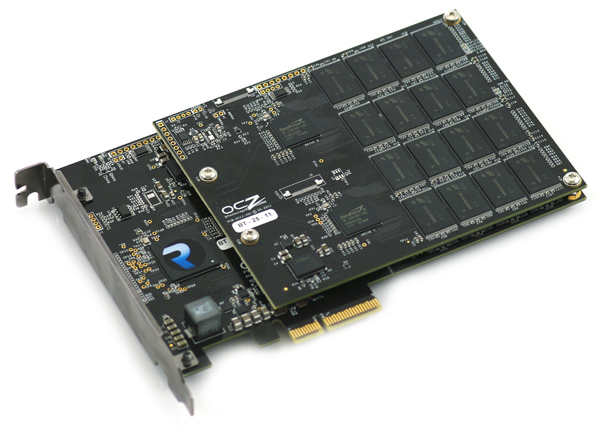

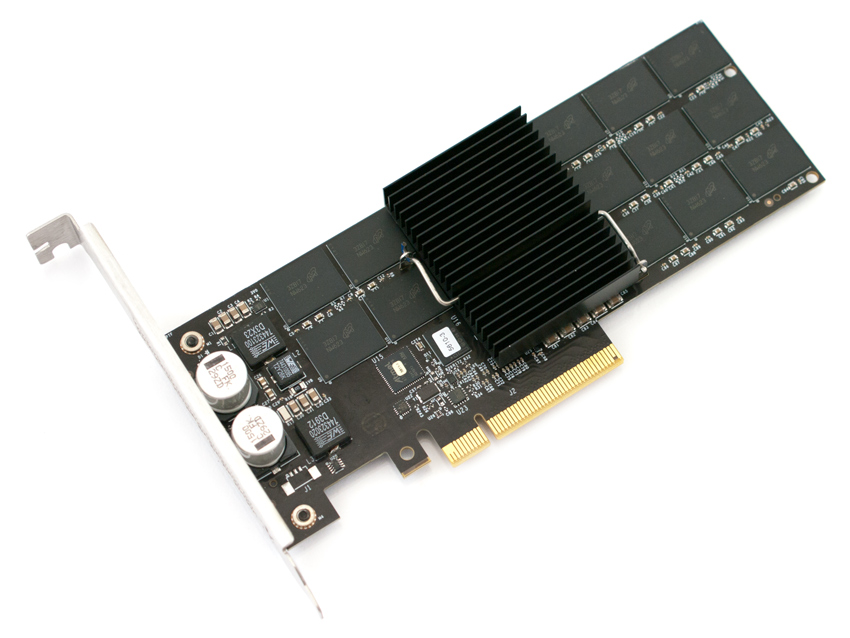

While this was undeniably faster than a single SATA drive, it was essentially a RAID0 (or some other RAID level) array of several SATA drives. OCZ also created the RevoDrive, which was essentially a number of standard SATA SSD controllers connected to a SATA-to-PCIe bridge. One example is the 480GB OCZ RevoDrive 3 X2[20], with four SandForce SF-2281 controllers and a quad-port SATA controller.

There was another interesting, although very short-lived, concept from OCZ: the RevoDrive Hybrid[21], which added a hard drive and used the SSDs as a caching tier. I’m not too sure about the software used here, but it had three separate SATA drives (2 SSDs + HDD) on a single card, with software that handled the caching in the background.

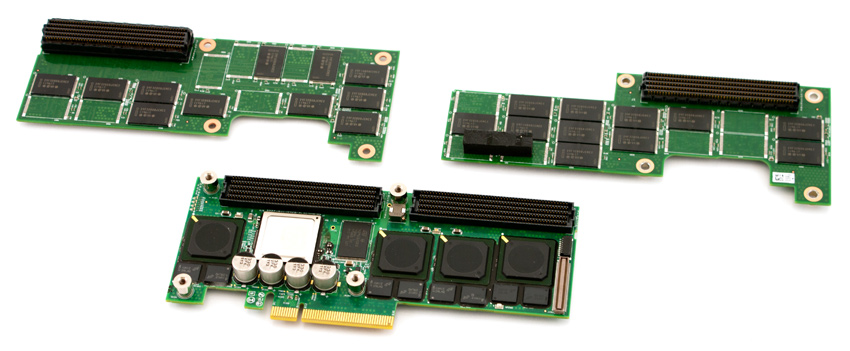

Intel’s entry in Q3 2012 was the SSD 910[22], which was yet another quad-drive setup, but it used SAS for the individual drives with an LSI SAS2008 PCIe-to-SAS bridge. This did have the advantage of better driver support, but not much else. The larger, 800GB model topped out at 2/1.5GB/s (read/write) and 180K/75K IOPS. Nevertheless, the card sandwich looked very impressive.

The four controllers are clearly visible on the base card with the PCIe edge connector, while the flash memory was moved to two daughterboards, stacked on top when assembled.

Native PCIe drives

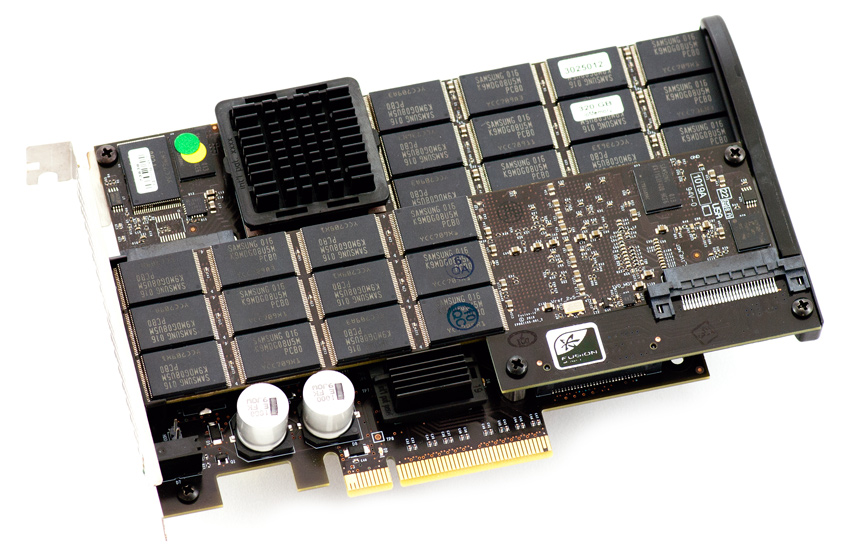

The earliest native PCIe drives were meant for the datacenter, simply because they often required separate drivers and were often non-bootable. One of the most prominent names is Fusion-io. They pioneered the PCIe SSD, calling their products ioMemory and ioDrives.

The ioDrive Duo was one of the earliest PCIe drives in Q3 2012. It used FPGAs that interfaced the NAND flash directly with the PCIe bus instead of an off-the-shelf SATA SSD controller. It was available in SLC and MLC versions with capacities ranging from 320GB to 1.28TB. The fastest variants reached 1.5/1.5GB/s[23] with 252,000/236,000 IOPS. It had two PCIe-based flash controllers and a PCIe switch that connected them to the host. The OS had direct access to the NAND, and it was Fusion-io’s added software layer that enabled the addressing of the NAND as a block device.

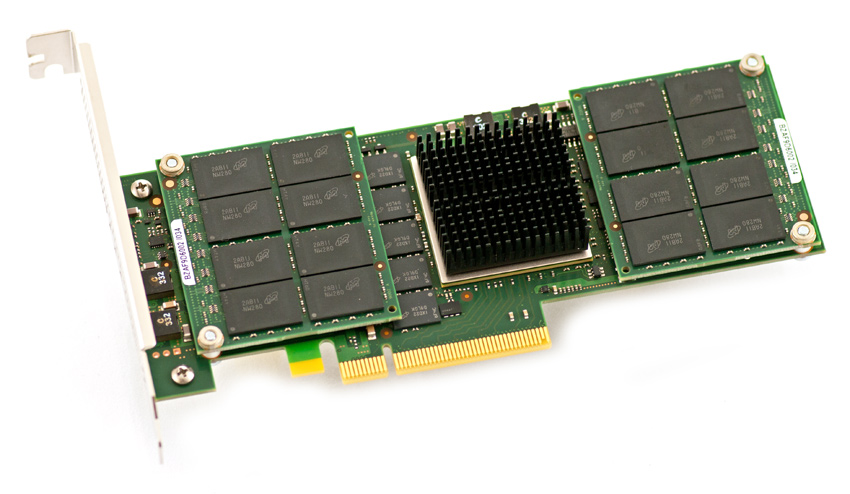

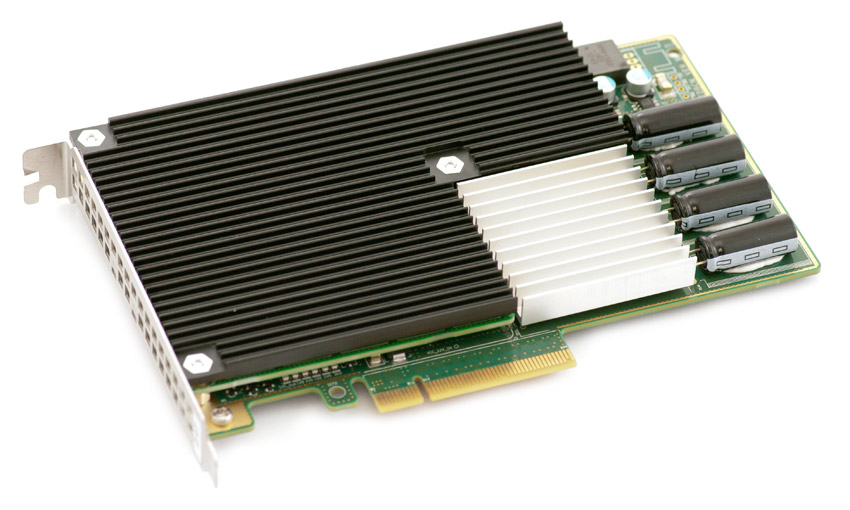

Micron took a different, and possibly better, approach with their P320h[24], which was available in 350GB and 700GB capacities in Q3 of 2012. They built a single, large controller and paired it with 2GB of DDR3 RAM and 1TB of SLC flash for the 700GB variant, giving us 30% of spare area. The controller has 32 NAND channels[25], four times as many as the usual SATA drives. It connected to the host using an x8 PCIe 2.0 link. The performance was outstanding at 3.2/1.9GB/s and 785K/205K IOPS, which is on par with or even higher than many of today’s PCIe drives.
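The spare-area figure is simple arithmetic, sketched below. The function name is made up for illustration, and the result depends on whether "1TB" is read as 1000GB or as the 1024GB that 64 packages of 16GB add up to.

```python
def spare_area_fraction(raw_gb: float, usable_gb: float) -> float:
    """Share of the raw NAND that is not exposed to the host."""
    if usable_gb > raw_gb:
        raise ValueError("usable capacity cannot exceed raw capacity")
    return (raw_gb - usable_gb) / raw_gb


# 700GB exposed out of a decimal 1TB of raw flash.
print(f"{spare_area_fraction(1000, 700):.1%}")  # 30.0%

# The same drive counted as 64 packages x 16GB = 1024GB of raw flash.
print(f"{spare_area_fraction(1024, 700):.1%}")  # 31.6%
```

Either way, roughly a third of the flash is held back from the host.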

The controller itself is huge, and the 64 NAND packages, 16GB each for a total of 1TB, are spread across the card and two extra carrier boards. The drive also supports RAIN (redundant array of independent NAND), which is essentially a RAID5 configuration across the NAND chips in groups of 7+1. This protects the data in the event of a NAND failure.
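To illustrate the idea behind a 7+1 group, here is a minimal sketch of generic single-parity (RAID5-style) protection using XOR. It is not Micron's implementation, whose internals are not described here; the block contents and helper name are made up for the example.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Seven hypothetical data blocks, one per NAND chip in a 7+1 group.
data = [bytes([i] * 8) for i in range(1, 8)]

# The eighth chip stores the XOR of the seven data blocks.
parity = xor_blocks(data)

# Simulate losing one chip and rebuilding it from the six survivors plus parity.
lost = 3
survivors = [block for i, block in enumerate(data) if i != lost]
rebuilt = xor_blocks(survivors + [parity])

print(rebuilt == data[lost])
```

Because XOR is its own inverse, any single missing block in the group can be recovered this way, at the cost of one eighth of the raw capacity in the group.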

In Q3 of 2014, the third and last generation of Fusion ioMemory drives was announced, with capacities of up to 5.2TB. All drives used 20nm MLC NAND and were reasonably fast at 2.7/1.5GB/s and 196K/320K IOPS. Yes, the IOPS figure was higher for writes than for reads. They used the same FPGA-based architecture as the earlier drives.

And last, another very exotic construction from Q3 2013, the Huawei Tecal ES3000[26]. It used not one, not two, but three controllers. And no, it wasn’t two PCIe controllers with a PCIe switch. They were all identical Xilinx Kintex-7 XC7K325T FPGAs with their own RAM pool and MLC NAND. These FPGAs still cost around £2000 each today. One of them interfaced with the host using 8 PCIe 2.0 lanes and used two 10G SERDES connections to the other two FPGAs. They were connected to 17 NAND channels and utilized a RAID5 engine, similar to Micron’s RAIN. The controller could, on the fly, change the NAND configuration from 17 to 16 or 15 channels if one or more NAND chips failed, increasing the service life of the drive.
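As a rough illustration of what shrinking the stripe means, the sketch below computes the share of a stripe that holds user data, assuming one parity channel per stripe. That assumption is mine; the actual stripe layout of the ES3000 is not documented here, and the function name is hypothetical.

```python
def data_fraction(channels: int, parity_channels: int = 1) -> float:
    """Share of a stripe that holds user data rather than parity."""
    if channels <= parity_channels:
        raise ValueError("a stripe needs at least one data channel")
    return (channels - parity_channels) / channels


# Full stripe, then the two degraded configurations mentioned above.
for channels in (17, 16, 15):
    print(f"{channels} channels: {data_fraction(channels):.2%} data")
```

Under this assumption the parity overhead grows only slightly as channels are retired, which is consistent with the drive staying in service after a NAND failure.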

The available capacities were 800GB, 1.2TB and 2.4TB. The largest model was capable of 3.2/2.8GB/s and 770K/630K IOPS. These are very impressive numbers, but for an MSRP of $10k+ and 60W of power consumption, I would expect nothing less. It wasn’t actually marketed as an SSD; it was sold as an “Application Accelerator”.

The Samsung XP941[27] was a much more familiar-looking drive, released in Q2 2014. It used a native PCIe controller (Samsung S4LN053X01) with AHCI, along with Samsung’s own 19nm MLC flash and RAM. The host communicated with the drive over four lanes of PCIe 2.0. The speeds were a big jump from the SATA III drives of the time, as it was rated for 1170/950MB/s and 122K/72K IOPS. It was available in 128/256/512GB for $230/$310/$570.
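To put the rated speeds next to the link they ran on, here is a minimal sketch that computes the theoretical one-direction bandwidth of a PCIe link from the per-lane signalling rate and line encoding. It ignores packet and protocol overhead, so real throughput is lower; the function and table names are made up for illustration.

```python
# Per-lane signalling rate in GT/s and line-encoding efficiency.
# PCIe 1.x and 2.0 use 8b/10b encoding; PCIe 3.0 uses 128b/130b.
GENERATIONS = {
    "1.0": (2.5, 8 / 10),
    "2.0": (5.0, 8 / 10),
    "3.0": (8.0, 128 / 130),
}


def link_bandwidth_mb_s(generation: str, lanes: int) -> float:
    """Theoretical one-direction bandwidth in MB/s, before protocol overhead."""
    gt_per_s, efficiency = GENERATIONS[generation]
    bits_per_s = gt_per_s * 1e9 * efficiency * lanes
    return bits_per_s / 8 / 1e6


# Rated sequential read speeds from the text, compared with the link ceiling.
for name, lanes, rated_mb_s in (("XP941", 4, 1170), ("P320h", 8, 3200)):
    ceiling = link_bandwidth_mb_s("2.0", lanes)
    print(f"{name}: {rated_mb_s} of {ceiling:.0f} MB/s ({rated_mb_s / ceiling:.0%})")
```

A PCIe 2.0 x4 link tops out at about 2000MB/s in each direction, so the XP941 still had headroom on its link, while the x8 P320h came much closer to its roughly 4000MB/s ceiling.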

It also used the M.2 (then called NGFF) card format in its 2280 variant, which is essentially the size that most modern drives use.

It looks just like any other modern SSD and was the fastest consumer drive on the market when it was released. Interestingly enough, Samsung used planar NAND here, even though V-NAND appeared in the 850 Pro just a quarter later. Perhaps the packaging technology wasn’t mature enough to fit the desired capacity into just two packages, or the controller was developed separately and wasn’t compatible with 3D NAND.
