Category: Cloud

-

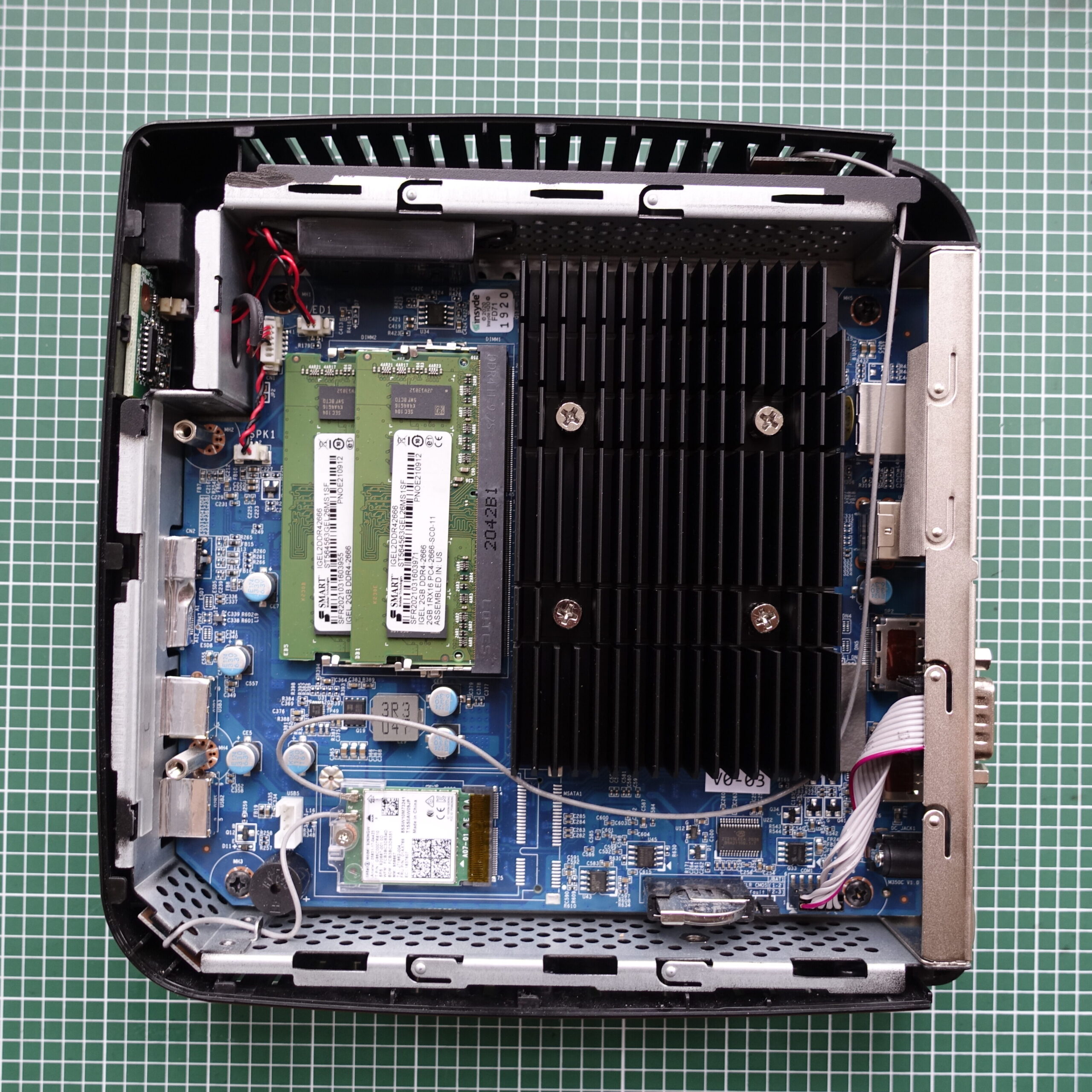

“thin” servers from Thin Clients

The hardware: I managed to snatch two Igel M350C thin clients off eBay for a very reasonable ~$45 apiece, shipped. That doesn’t sound all that impressive, but these actually have AMD Ryzen Embedded R1505G CPUs. The Ryzen Embedded R1505G is a 64-bit dual-core embedded x86 microprocessor introduced by AMD in early 2019. This processor is based on AMD’s Zen microarchitecture and is fabricated on a 14…

-

Deploying WordPress on ORACLE Cloud

This is a guide on how to deploy WordPress (or any other container-based service) on ORACLE Cloud and set up a URL with a Dynamic DNS provider. You could use the guide for a TeamSpeak or Minecraft server as well. We’ll use a free-tier ARM-based VM, which runs on their Ampere Altra 80C CPU. The free-tier…